Computing technology has evolved rapidly in America and Britain over the past few decades. Although the development of computers can be traced back to the 1830s and Charles Babbage’s design for a mechanical computer, the Analytical Engine, it was only during World War II that the technology started to gain attention.

In America, companies such as IBM and Bell Labs were building early computers by the 1940s. These machines were bulky and expensive, but they paved the way for modern computers as we know them today. In contrast, Britain’s early computing industry was largely driven by academia rather than industry. Researchers such as Alan Turing at Bletchley Park played a crucial role in developing code-breaking machines that helped win World War II.

During the 1950s and 60s, both America and Britain experienced a boom in computer development.

Key innovations from each country

Over the last century, computing technology has changed drastically, and both America and Britain have been major contributors to the evolution of this industry. An early breakthrough was the invention of the thermionic valve (vacuum tube) in 1904. This innovation paved the way for the more advanced computer systems that later emerged.

Then came the Colossus computers, designed by British telephone engineer Tommy Flowers during World War II. They were used by the Allies to break German codes. Later, in 1951, Remington Rand introduced its UNIVAC I computer, which is considered one of the most significant innovations in American computing history because it was an early commercially available stored-program computer.

Another innovation that stands out is ARPANET (Advanced Research Projects Agency Network), a US Defence Department project from 1969 that created a network between several universities and government sites to share academic and research data more efficiently.

Differences in development and production

The development and production of computing technology have been central to the evolution of modern societies. The United States and Britain are two countries that have experienced remarkable progress in this field, but there are significant differences between the two. A primary reason for this has been the contrasting approaches taken by each country towards innovation, research, development, and funding.

In America, technological innovation has been encouraged through a market-driven approach in which companies are incentivised to develop new products based on consumer demand. This approach has resulted in greater investment in research and development for computer hardware and software products such as personal computers, video game consoles, mobile phones and tablets.

Britain, in contrast to America’s market-driven approach, has emphasised academic research, which focuses on developing fundamental technology that can be used by organisations across many industries.

Prominent figures in American and British computing industries

The computing industry has evolved rapidly over recent decades, with several prominent figures playing a pivotal role. These individuals have made significant contributions to the field, and their work continues to impact society.

One such figure is Bill Gates, the co-founder of Microsoft. As a pioneer of the personal computer era, he helped revolutionise the industry through user-friendly software. His leadership skills and innovative ideas propelled Microsoft to become one of the most successful tech companies of all time.

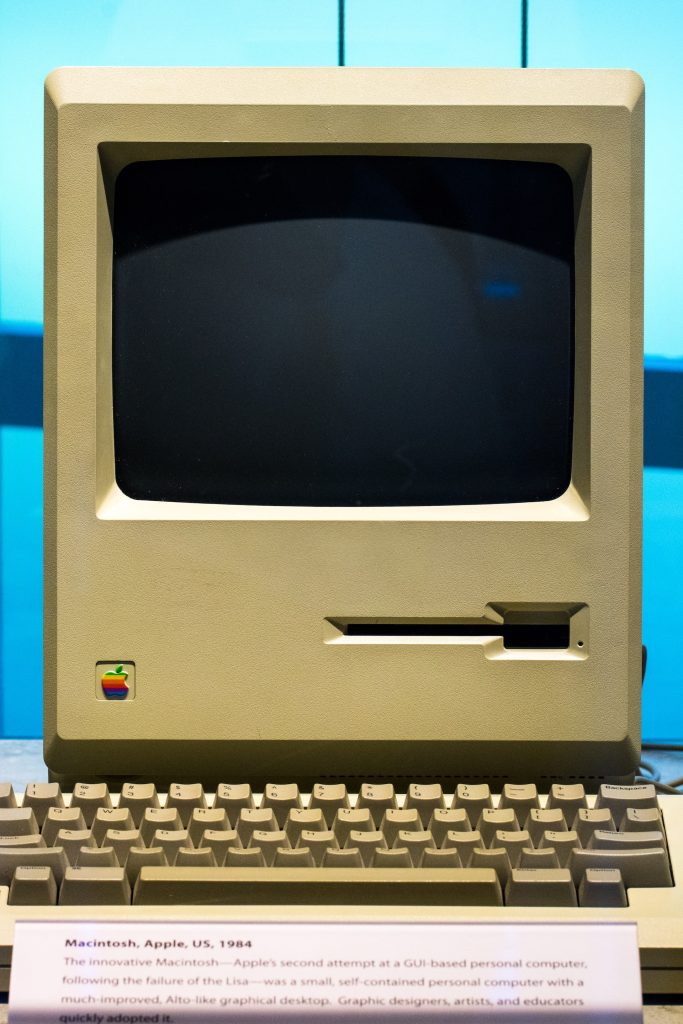

Moving to the competition, the co-founder of Apple, Steve Jobs, made a contribution to the computer industry that is hard to overstate. He was not only instrumental in developing personal computers through his innovative designs and marketing strategies, but also played a significant role in driving the development of desktop publishing, digital music players, smartphones and other devices that are widely used today.

Another significant figure is Tim Berners-Lee, a British computer scientist who invented the World Wide Web (WWW) while working at CERN in Switzerland. His invention transformed how we access information and made it possible for people all over the world to communicate with each other more easily.

Comparison of current status of computing technology in both countries

The relative progress of computing technology in America and Britain has been a topic of debate for years. Both countries have made significant strides in the development and deployment of advanced computing technologies, but their current status and approach to technology are quite different.

In America, tech giants such as Apple, Google, and Microsoft dominate the market with their cutting-edge hardware and software solutions. The country is also home to Silicon Valley, the hub for innovation in technology where start-ups thrive. Britain’s approach to technology, on the other hand, is more research-based, with investment focused on R&D programmes. In recent times, the number of British tech start-ups has increased, but the sector is still far smaller than America’s.

In terms of infrastructure, both countries have broadband services available almost everywhere. However, the United States is slightly ahead of Britain when it comes to high-speed internet access: according to a recent study, around 97% of Americans have access to high-speed internet compared to 95% of Britons. When it comes to cybersecurity and data protection laws, on the other hand, Britain is considered more advanced than the US due to its strict regulations and focus on privacy.

Innovation, competition, and market trends

Both America and Britain have played important roles in computing technology, with their respective companies leading the way in developing new products and services. Competition continues to drive progress, forcing companies to constantly improve and innovate.

One of the biggest trends in recent years is the rise of cloud computing. This technology allows businesses to access data and software over the internet rather than relying on physical infrastructure. Companies such as Microsoft, Amazon, and Google have made significant investments in cloud computing services, creating fierce competition for market share. In addition to these major players, smaller start-ups are also making strides by offering specialised cloud solutions for niche markets.

Another emerging trend is the increasing use of artificial intelligence (AI) and machine learning (ML) technologies.

How advancements have impacted society differently in each country

Computer technology has impacted society differently in America and Britain.

In America, the rise of Silicon Valley led to the development of personal computers, which transformed the way people work and communicate. Smartphones also had a major impact on American society. Americans now rely heavily on mobile devices for everything from entertainment to communication.

In Britain, the advancement of computer technology has taken a different path. The country is a leader in artificial intelligence research, carried out at prominent universities such as Cambridge and Oxford. Thanks to this focus, there have been significant developments in both healthcare and finance, with AI being used extensively to improve these sectors.

Additionally, British banks have invested heavily in online banking services that are accessible through mobile devices, making it easier for people to manage their finances remotely.

The future of computer technology in America and Britain

As we head into the future, it’s clear that computer technology will play a major role in society in both America and Britain. This is especially true as we continue to see advancements in artificial intelligence (AI) and machine learning. In fact, some experts predict that AI will become more intelligent than humans, which could have significant implications for how we live our lives.

Another area where we’re already seeing the impact of computer technology is in healthcare. Doctors are using AI to make more accurate diagnoses and treatment plans, while patients can use wearable devices to monitor their own health at home. Virtual reality is also being used to train medical students and provide therapy for mental health conditions. As these technologies become more widely available, they’re likely to improve our overall quality of life by enabling us to stay healthier for longer.